As part of the NWA FairNews project, we have recently been working on the question of how to provide fair access to online news. That question immediately raises a whole lot of others: when is something actually fair or biased, how can we measure this, how can we change existing algorithms to make them fairer, and so on. These questions cannot be answered by one discipline alone. Luckily, FairNews is a collaboration between political and communication science scholars at the University of Amsterdam and computer scientists at TU Delft, which allows us to approach the problem from very different perspectives (as a researcher this is a lot of fun, but reaching a common understanding is always challenging in such circumstances!).

As one of our first big joint efforts, we have developed a simulation framework for understanding the effects of recommender systems in online news environments. This work was just accepted at the 2nd ACM Conference on Fairness, Accountability, and Transparency:

    @inproceedings{Bountouridis2019SIREN,
      author    = {Bountouridis, Dimitrios and Harambam, Jaron and Makhortykh, Mykola and Marrero, Monica and Tintarev, Nava and Hauff, Claudia},
      title     = {SIREN: A Simulation Framework for Understanding the Effects of Recommender Systems in Online News Environments},
      booktitle = {FAT* '19},
      year      = {2019}
    }

In short

The growing volume of digital data stimulates the adoption of recommender systems in different socioeconomic domains, including ecommerce, music, and news industries. While news recommenders help consumers deal with information overload and increase their engagement and satisfaction, their use also raises an increasing number of societal concerns, such as Matthew effects, filter bubbles, and the overall lack of transparency.

We argue that transparency for content providers is an under-explored avenue. We therefore designed a simulation framework called SIREN (SImulating Recommender Effects in online News environments) that allows news content providers to

- select and parameterize different recommenders; and

- analyze and visualize their effects with respect to two diversity metrics at little cost.

Taking the U.S. news media as a case study, we use SIREN to analyze recommender effects with respect to two diversity metrics: long-tail novelty and unexpectedness. The analysis offers a number of interesting findings, such as the similar potential of certain algorithmically simple strategies (item-based k-Nearest Neighbour) and sophisticated ones (based on Bayesian Personalized Ranking) to increase diversity over time.
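To give a feel for what a long-tail novelty score captures, here is a minimal Python sketch. It assumes one common formulation, the mean "popularity complement" of the recommended articles, which is not necessarily the exact definition used in SIREN. The function name `long_tail_novelty`, the toy reading history, and the article ids are all made up for illustration.

```python
from collections import Counter


def long_tail_novelty(recommended, read_counts, num_users):
    """Mean popularity complement of a recommendation list (assumed formulation).

    Each article scores 1 - (readers / num_users): close to 1 for rarely
    read articles, close to 0 for articles almost everyone has read.
    """
    if not recommended:
        return 0.0
    scores = [1.0 - read_counts.get(item, 0) / num_users for item in recommended]
    return sum(scores) / len(scores)


# Hypothetical reading history: user id -> set of article ids already read.
history = {
    "u1": {"a", "b"},
    "u2": {"a"},
    "u3": {"a", "c"},
    "u4": {"a", "b"},
}

# Number of distinct readers per article.
read_counts = Counter(article for articles in history.values() for article in articles)

popular_list = ["a", "b"]  # articles from the head of the popularity distribution
niche_list = ["c", "d"]    # articles from the long tail ("d" has no readers yet)

print(long_tail_novelty(popular_list, read_counts, len(history)))
print(long_tail_novelty(niche_list, read_counts, len(history)))
```

A recommender that keeps pushing already popular articles scores low on this measure, while one that surfaces rarely read articles scores high. Tracking such a score over successive simulated recommendation rounds is the kind of trend the analysis above refers to.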

Overall, we argue that simulating the effects of recommender systems can help content providers to make more informed decisions when choosing algorithmic recommenders, and as such can help mitigate the aforementioned societal concerns.

SIREN

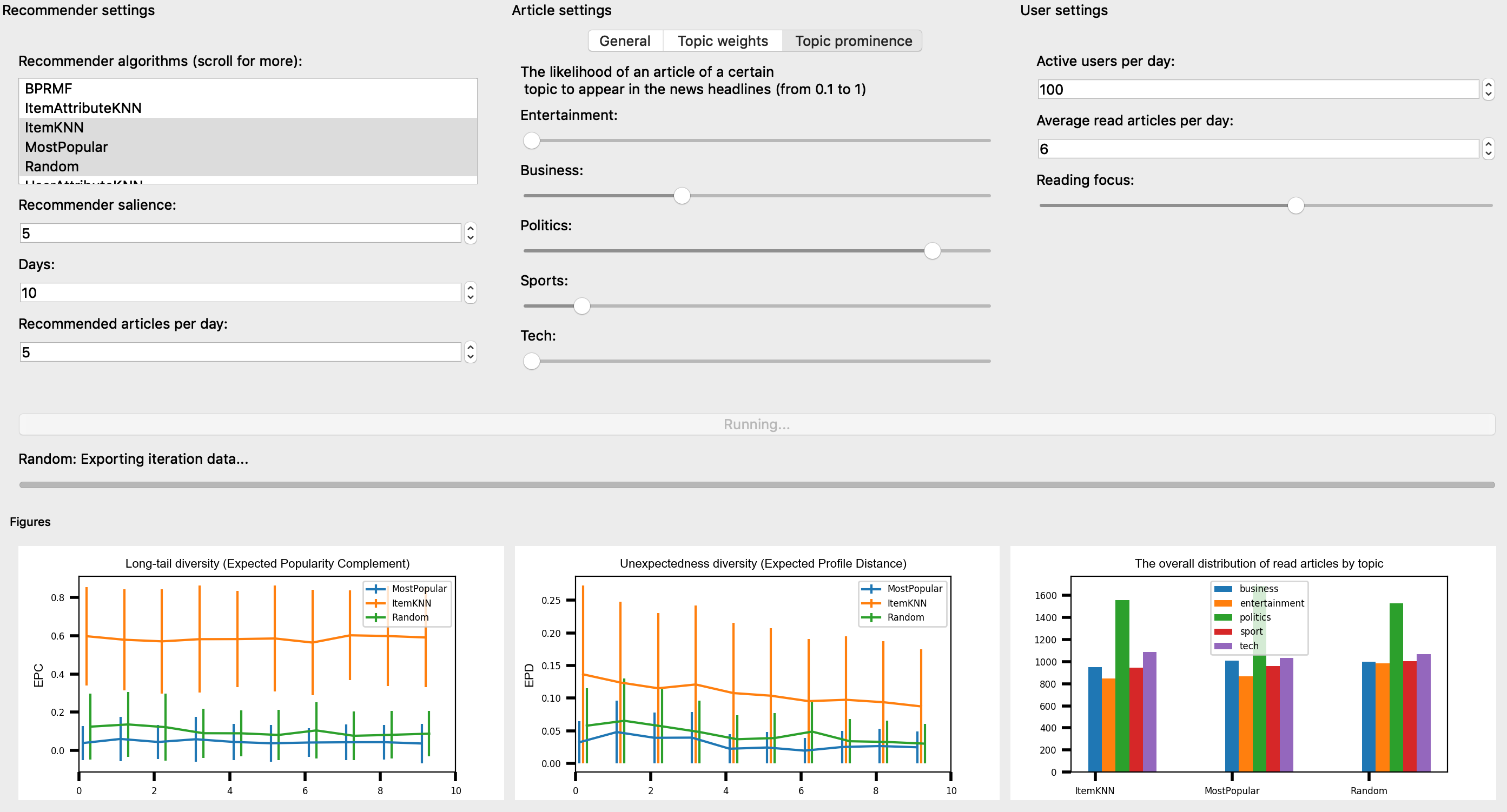

Let’s take a quick look at the UI shown above. Recommender settings, news article settings and user (i.e. news reader) settings can be adjusted at will. The bottom row shows the generated visualizations for different metrics and different recommender algorithms.

Adjusting recommender algorithm choices and settings gives a content provider an intuitive idea of the impact different settings have on its readers. The simulation is based on empirical data, and while a perfect correspondence to reality cannot be guaranteed, such simulations do provide clear insights into the tendencies recommender algorithms exhibit. Because SIREN is open-source, content providers can insert their own specifications and test different recommender systems. Raising awareness of the consequences of deploying different algorithms is a concrete way of making algorithms more transparent, and SIREN lets content providers test the effects of the recommendation algorithms they have in mind with few resources.

Preprint and source code

Preprint and source code.